CASE STUDY

From Black Box to Glass Box

Redesigning processing job visibility at Relativity. Turning silence into proof of life.

Senior Product Designer · Relativity · 2023-2024 (Shipped)

8 → 2

False-positive stuck-job incidents per week

75% reduction, exceeding the 50% OKR target. ~300 fewer unnecessary support calls/year.

THE PROBLEM

Processing jobs felt like a black box

Relativity processes millions of documents per case for law firms, government agencies, and corporate legal teams across 40+ countries. The Processing Set page is where users monitor data ingestion jobs that can run for hours.

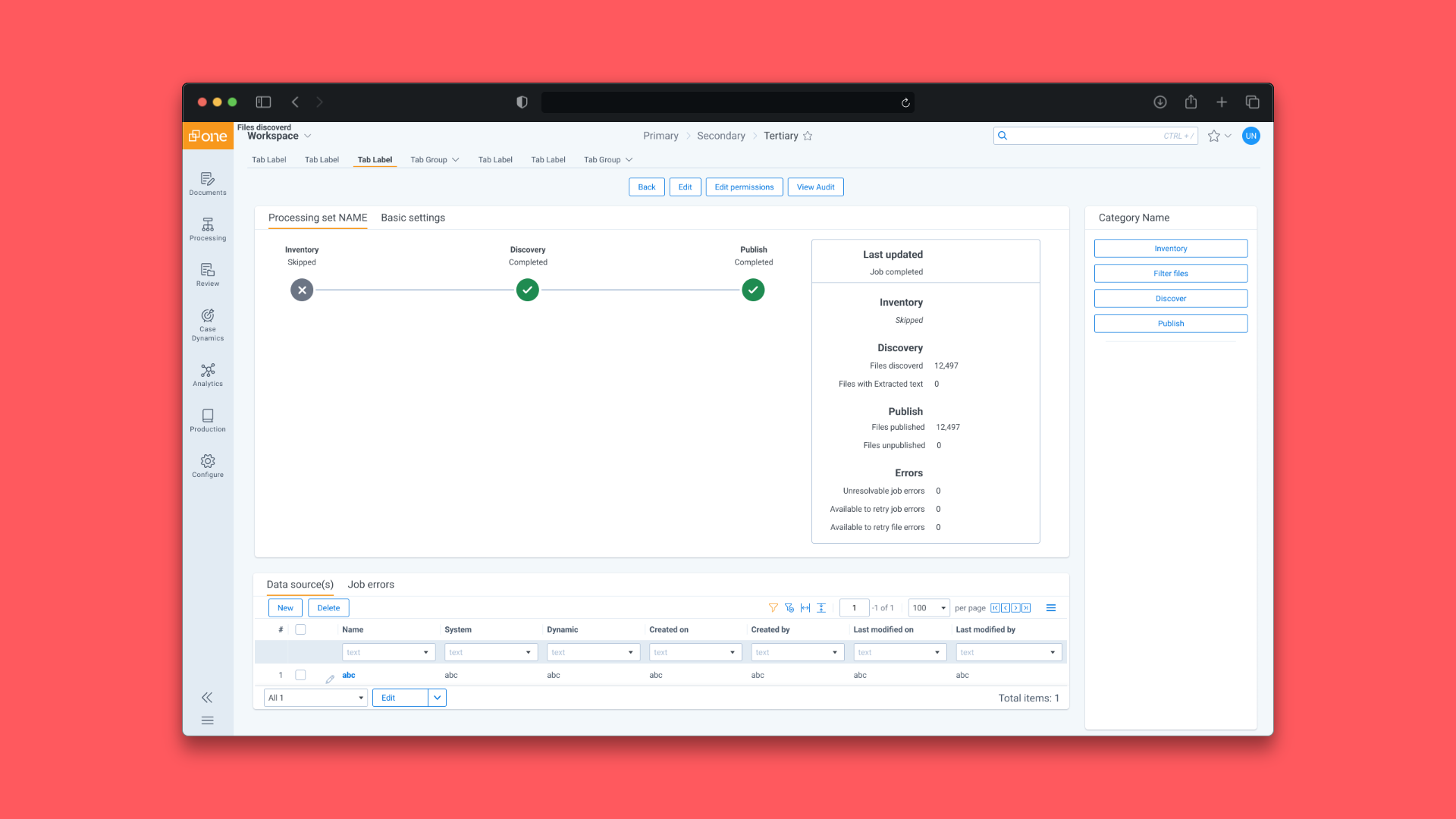

The page showed three status bubbles, a sidebar with basic stats, and not much else. When a job completed, there was no record of what happened during processing. When a job was running, users had no way to tell if the system was grinding through OCR on a 600MB PDF or genuinely stuck.

We were averaging 8 false-positive stuck-job incidents per week. Users would call support asking, "Is my job stuck?" Support would check, say "give it more time," and the job would finish on its own. The OKR target was to cut that number in half.

This was the #1 priority for the entire Enrichment domain in 2024. The reasoning from leadership: if the platform isn't the most stable for complex matters, customers won't trust it, and the value of every other product initiative drops.

DISCOVERY

The system was working. It just wasn’t showing its work.

Working with PM Eric Wendt, I categorized Q2 support incidents by root cause. Over half came down to OCR visibility.

London workshops

In April 2024, I facilitated Job Visibility workshops with Fujitsu, Lineal, PwC UK, and KPMG UK. At PwC, the room was too small for the full group. I pivoted on the spot, rebuilt the exercise in FigJam, and ran it digitally across two rooms.

Winston & Strawn: Inspiration Mining

In August 2024, one month before Milestone 2 shipped, I ran a full-day onsite at Winston & Strawn's Chicago office using a workshop format I developed called Inspiration Mining. Rather than asking what's wrong, participants identified what they liked about competing tools and why. We peeled back the layers to find transferable concepts, not feature requests.

"Relativity taking time to come on-site for this type of workshop is very meaningful to us."

— Winston & Strawn

Users didn't need a dashboard. They needed proof of life.

"Show me that something changed in the last few minutes, and I'll give you the benefit of the doubt."

DESIGN

Five decisions that built trust back in

1. Break progress into sub-phases

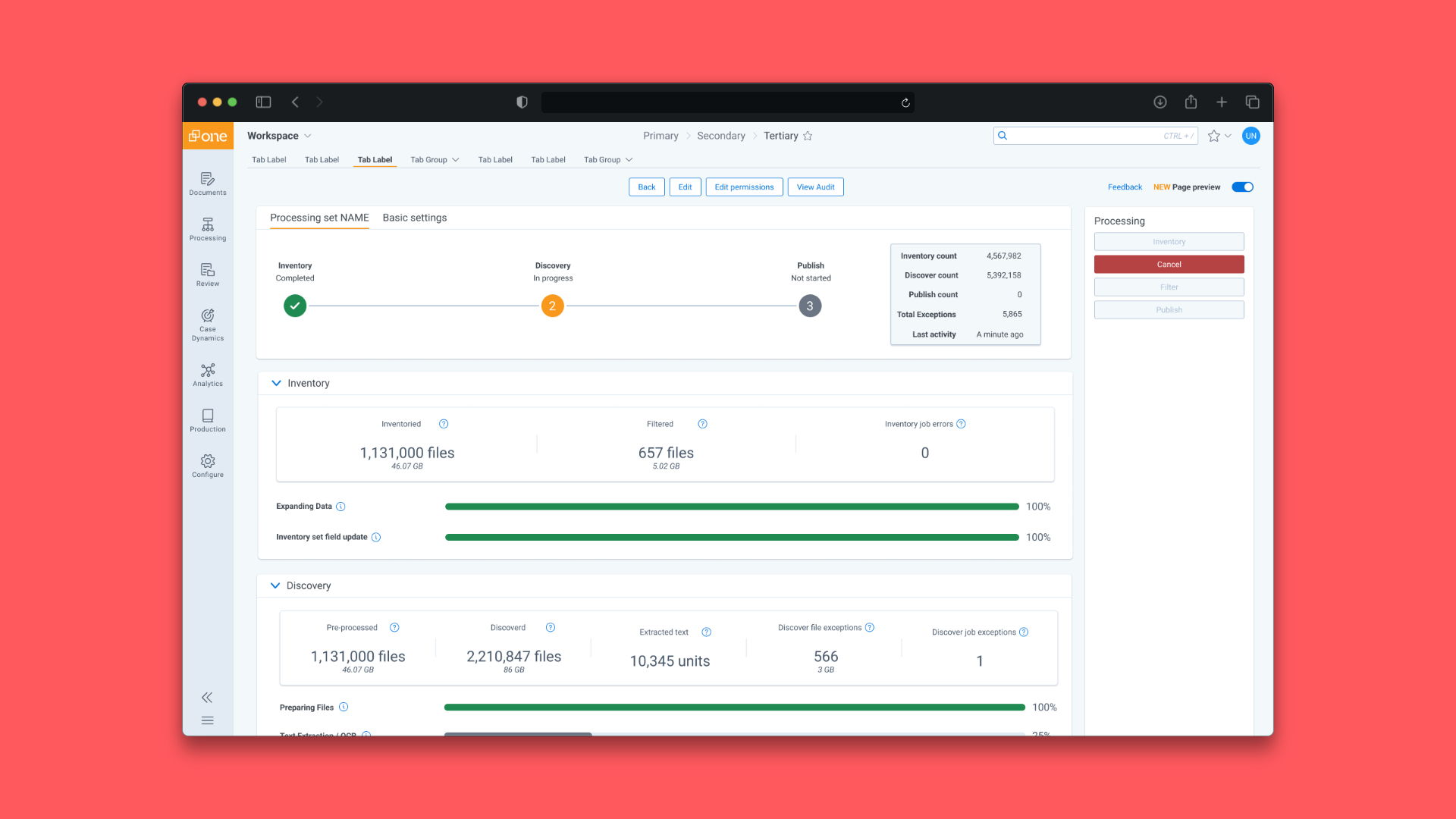

We replaced the single progress bar with three collapsible sections (Inventory, Discovery, Publish), each with sub-phase progress bars, descriptions, data source counts, and elapsed time.

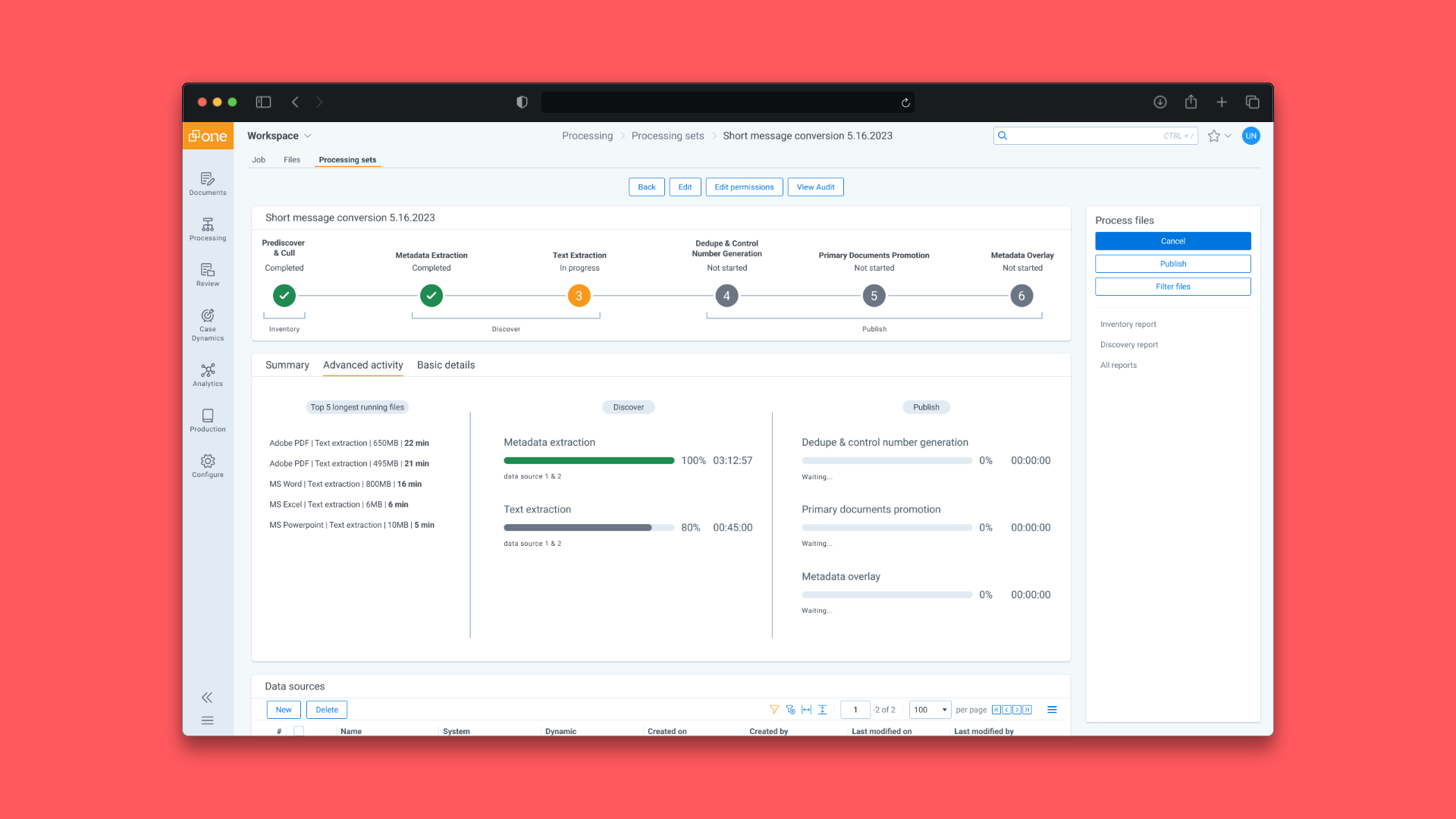

An early concept explored a six-step stepper with a "Top 5 longest running files" leaderboard. We pulled back to the collapsible layout after feedback showed users wanted density and familiarity.

2. Rename errors to exceptions

"Errors" implies the system failed. In most cases, these were expected outcomes the system handled correctly. The label was wrong. We renamed them to "exceptions" and added info-icon tooltips with byte-size context. A small change with outsized impact: it shifted the default interpretation from "something broke" to "here's what the system found."

3. Ship a toggle, not a switch

These changes touched UX that had been in place for 10+ years, so we built a user-controlled toggle. Turning it on surfaced an enablement modal explaining what the preview was, why we built it, and how to give feedback. Turning it off prompted a feedback modal tagged in New Relic. The opt-out reasons were more useful than any survey.

4. Surface environmental factors

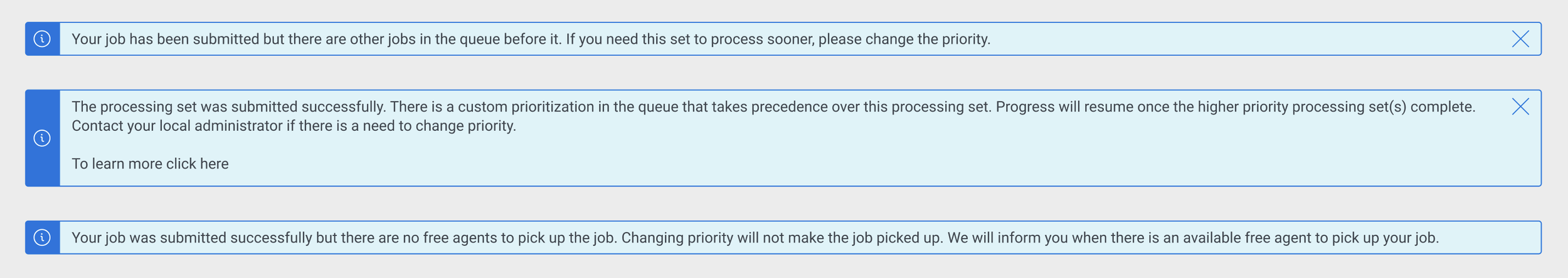

Milestone 2 addressed the 15% of incidents caused by factors outside the user's job. We designed informational banners (not warnings) for queue contention, deprioritization, and publish throttling. The distinction matters: we're telling users what the system is doing, not recommending action.

5. Start with the smallest proof of life

Before sub-phase bars, we shipped a "Last Updated" timestamp. If users could see that the numbers had changed recently, would that hold off a support call? It did. This incremental pattern defined the project: ship the smallest thing that shifts confidence, measure, then layer on.

ROLLOUT

Phased rollout, earned trust

REFLECTIONS

What I learned

Language is UI

"Error" triggered a support call. "Exception" set an expectation. One word. One of the most impactful decisions in the project.

Ship the toggle before the feature

User control over adoption = respect for workflows + a free feedback channel. Opt-out reasons beat surveys.

Workshops compound

London validated job visibility and solidified Milestone 2. It also fed into message conversion, error workflows, and the broader roadmap.